Artificial intelligence (AI) is everywhere. It’s in our newsfeeds, in our boardroom conversations, and yes, even in our phones asking us if we meant to say “ducking.” But with all the noise, one question keeps bouncing around: is AI truly transformative, or are we all riding another overhyped tech wave? Depending on who you ask, generative AI is either the dawn of a golden era or the digital version of snake oil.

This article doesn’t promise you a crystal ball. What it does promise is clarity. We’re diving deep into what AI technology really is today — not the sci-fi stuff, but the real, gritty, business-impacting kind. We’ll explore how generative AI is changing the way we work, what traps to avoid, and how to think more clearly about its role in your organization.

Want to go further? Watch the full replay of the N2 webinar ‘AI and data governance: how to harness the power of your enterprise data’, hosted by Frédéric Etheve, former COO of OVHcloud and now a part-time tech executive, and Nicolas Delaby, co-founder and CEO of N2 Help & Solutions.

AI: Between Hype Cycles and Human Hope

AI is one of those rare technologies that sits at the crossroads of emotion and intellect. For some, it’s an existential threat; for others, a golden ticket to operational nirvana. The reality? It’s both — and neither.

Like electricity or the internet before it, AI has become a Rorschach test for society’s ambitions and anxieties. Some see liberation from mundane tasks, others fear a dystopia of jobless futures. And yet, most of us fall somewhere in the middle — cautiously optimistic, but skeptical of the overblown promises.

The trick is recognizing that AI isn’t one monolithic thing. It’s a spectrum. It ranges from basic automation (think spam filters) to mind-bending generative AI models (hello, ChatGPT). And understanding where a technology sits on that spectrum is key to using AI tools wisely.

From Dotcom to AI: Are We Repeating History?

Let’s rewind to the late ’90s. The internet was the Wild West, and every company was slapping a “.com” on their name and watching their valuations soar. Fast forward a few years — the bubble burst, fortunes evaporated, but the core technology? It stayed. It evolved. It changed everything.

Sound familiar?

Today, generative AI sits at a similar crossroads. Investors are throwing billions at startups promising the moon. Some AI tools deliver jaw-dropping capabilities; others, not so much. But here’s the kicker — just like the internet, AI is not going away. The froth might fade, but the foundations are solid.

So yes, there may be a bubble — in valuation, in hype, in breathless media headlines. But that doesn’t mean AI itself is hollow. It means we’re still learning how to use it right, how to integrate it meaningfully, and how to make sure it solves real problems rather than just impressing the C-suite.

The Rise of Accessible AI

Once upon a time, if you wanted to experiment with AI, you needed a PhD in machine learning and a server room that sounded like a wind tunnel. Today? All you need is a smartphone and a curious mind.

The rise of AI tools like ChatGPT, DALL·E, and Claude has changed the AI landscape completely. Generative AI, especially large language models (LLMs), which are deep learning models trained to process and generate natural language, has put powerful capabilities into the hands of everyday users. From writing emails to brainstorming startup ideas, AI is no longer reserved for labs; it’s in your pocket.

And it’s not just about fun and productivity hacks. This accessibility marks a pivotal shift. When a technology becomes mainstream, it creates room for innovation at scale. More people experimenting means more unexpected, impactful AI applications. That’s how revolutions happen — not in ivory towers, but in kitchens, classrooms, and corner offices.

Data: The Hidden Power Behind AI

If AI is the engine, data is the fuel. And not just any fuel — think rocket-grade, high-octane stuff. Because at the core of every successful AI model is a mountain of data, meticulously processed, tagged, cleaned, and structured.

Most AI systems, from fraud detection to content recommendation, rely on spotting patterns in vast data sets. These patterns help the system “learn” and predict. But here’s the twist: the quality of what you get out of AI depends heavily on the quality of the data you put in. Garbage in, garbage out, as they say.

This is where many businesses stumble. They get excited about the AI promise but forget the plumbing — the data infrastructure that makes AI work. Before you plug in an algorithm, you need to ask: do we even have the right data? Is it clean? Is it current? Is it complete?
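To make three of those questions concrete (is the data clean, current, and complete?), here is a minimal Python sketch that scores a handful of records. The record fields, the 365-day freshness window, and the function name `readiness_report` are hypothetical, and counting duplicate IDs is only one narrow proxy for “clean.” Whether you have the right data in the first place still takes a human judgment about the business problem.

```python
from datetime import date, timedelta

# Hypothetical customer records; field names are illustrative only.
RECORDS = [
    {"customer_id": 1, "email": "a@example.com", "updated_at": date(2024, 5, 1)},
    {"customer_id": 2, "email": None, "updated_at": date(2024, 5, 3)},
    {"customer_id": 2, "email": "b@example.com", "updated_at": date(2022, 1, 9)},
]

REQUIRED_FIELDS = ("customer_id", "email", "updated_at")


def readiness_report(records, today, max_age_days=365):
    """Score records on completeness, duplicate IDs, and freshness."""
    total = len(records)
    if total == 0:
        raise ValueError("no records to assess")

    # Complete: share of records with every required field filled in.
    complete = sum(
        all(r.get(f) is not None for f in REQUIRED_FIELDS) for r in records
    )

    # Clean (one narrow proxy): number of records whose ID was already seen.
    seen, duplicates = set(), 0
    for r in records:
        if r.get("customer_id") in seen:
            duplicates += 1
        seen.add(r.get("customer_id"))

    # Current: share of records updated within the allowed window.
    cutoff = today - timedelta(days=max_age_days)
    fresh = sum(
        r.get("updated_at") is not None and r["updated_at"] >= cutoff
        for r in records
    )

    return {
        "completeness": complete / total,
        "duplicate_ids": duplicates,
        "freshness": fresh / total,
    }


report = readiness_report(RECORDS, today=date(2024, 6, 1))
print(report)
```

Even on three rows, the report surfaces a missing email, a duplicated customer ID, and a stale record, exactly the kind of plumbing problems that quietly degrade a model.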

Data Governance: The Unsung Hero

Let’s talk governance. No, not the kind involving politicians and suits. We’re talking data governance — the unsung hero behind successful AI projects. Think of it as the rulebook for your organization’s data: who owns it, who accesses it, how it’s stored, and how it’s protected.

Without solid data governance, AI is like a race car with no steering wheel. You might go fast, but you have no control — and that’s a disaster waiting to happen. Data governance ensures that data is reliable, secure, and available to the right people at the right time.
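One way to picture that rulebook is as a small, explicit policy per dataset covering the four questions above: who owns it, who may access it, where it is stored, and how it is protected. The sketch below is a minimal illustration, not a governance product; the dataset names, roles, and regions are hypothetical, and real deployments would enforce this in an access-management system rather than a Python dictionary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetPolicy:
    owner: str                # who owns the data
    allowed_roles: frozenset  # who may access it
    storage_region: str       # where it is stored
    encrypted_at_rest: bool   # how it is protected


# Hypothetical rulebook; dataset names, roles, and regions are illustrative only.
RULEBOOK = {
    "crm_contacts": DatasetPolicy(
        "sales_ops", frozenset({"sales", "marketing"}), "eu-west", True
    ),
    "payroll": DatasetPolicy("hr", frozenset({"hr"}), "on-prem", True),
}


def can_access(role: str, dataset: str) -> bool:
    """Deny by default: unknown datasets and unlisted roles get no access."""
    policy = RULEBOOK.get(dataset)
    return policy is not None and role in policy.allowed_roles


print(can_access("marketing", "crm_contacts"))  # True
print(can_access("sales", "payroll"))           # False
```

The deny-by-default choice matters for AI projects: a new pipeline gets access to a dataset only when someone deliberately adds its role to the policy.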

Effective data governance also builds trust. When people know the data is accurate and protected, they’re more likely to use it. And when AI systems are built on that trusted foundation, they become more effective — and far less risky. It’s not flashy, but it’s foundational. And it’s what separates real transformation from digital chaos.

Aligning AI Use Cases with Data Maturity

Imagine trying to race a Formula 1 car on a muddy dirt track. That’s what deploying advanced AI on immature data systems feels like. One of the biggest mistakes companies make is misaligning their AI ambitions with their actual data readiness.

Data maturity isn’t just about how much data you have. It’s about how well you manage it. Is it structured? Is it centralized? Do your teams know where it is and how to use it? Organizations with mature data governance can take on more complex AI use cases. Those without? They should start small — and smart.

This alignment matters because AI systems, especially those making predictions or decisions, need reliable data to work effectively. When data is inconsistent, incomplete, or scattered across silos, the output becomes unreliable, sometimes even dangerous. Think of data maturity as your AI foundation — get that right, and the rest becomes a lot easier.

Business First: Avoiding the Tech-First Trap

Here’s a common scenario: a company hears about a cool new generative AI tool and rushes to implement it — only to realize later it doesn’t solve any real problem. Sound familiar?

Tech-first thinking is seductive. The demos are impressive. The possibilities seem endless. But without a clear business case, AI becomes a flashy expense rather than a strategic asset. The key? Flip the script. Start with the business need — not the tech.

Want to reduce customer churn? Improve forecasting? Streamline supply chains? Cool. Define those goals clearly, then explore how AI can help. When you lead with business value, AI becomes a tool for transformation. When you lead with hype, it becomes a distraction.

The AI Use Case Ladder

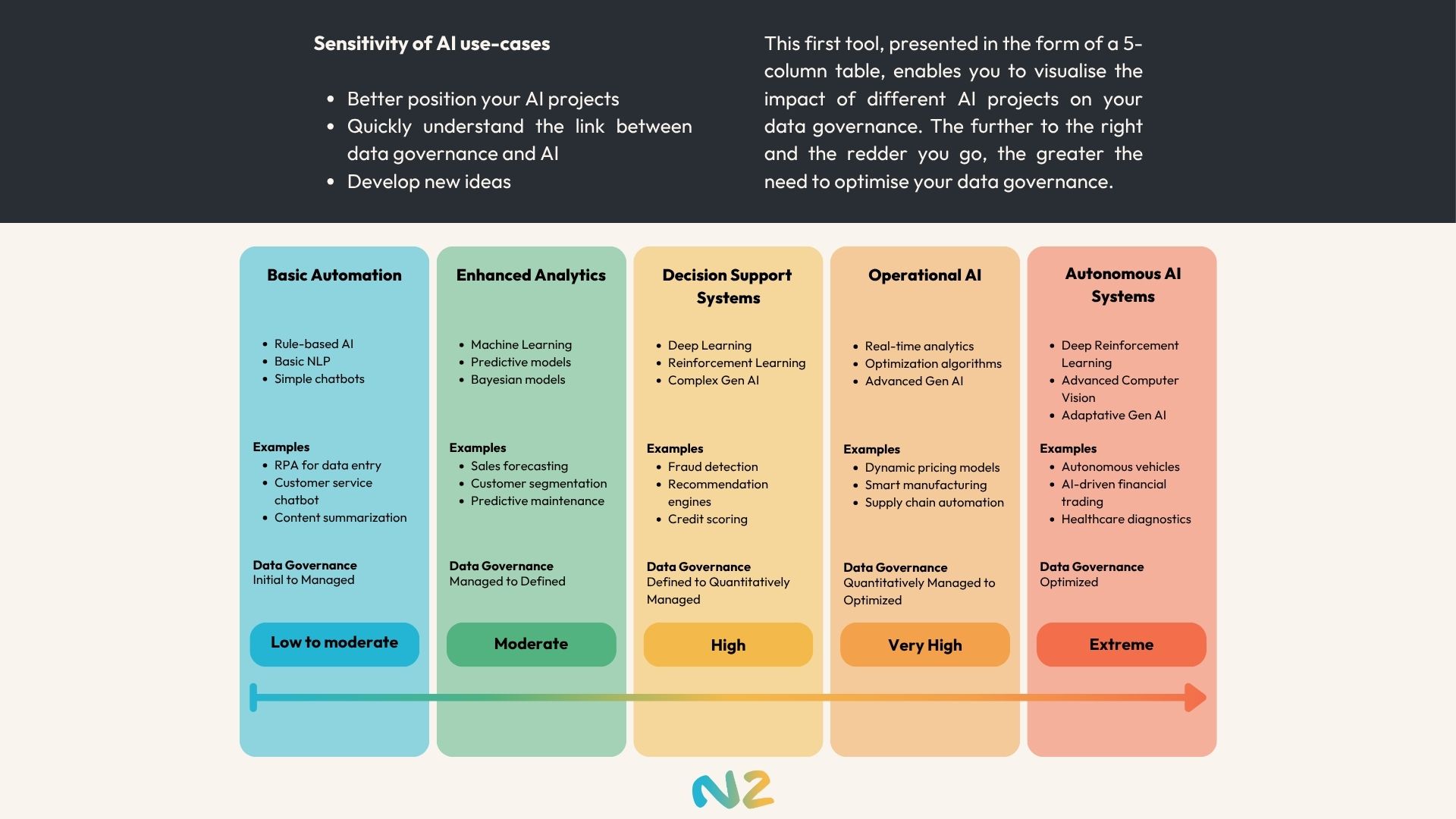

Not all AI use cases are created equal. Some are low-risk, high-reward no-brainers. Others? Not so much. Understanding where a use case sits on the AI maturity ladder can help you deploy intelligently and avoid nasty surprises.

Let’s break it down:

- Level 1 – Basic Automation: Think chatbots that answer FAQs or systems that sort incoming emails. Low complexity, low risk.

- Level 2 – Enhanced Analysis: Tools that summarize documents, categorize customers, or generate insights. Still human-supervised, but adding serious efficiency.

- Level 3 – Decision Support: AI flags suspicious transactions or suggests next-best actions for sales teams. Humans make final calls, but AI nudges decisions.

- Level 4 – Operational AI: Automated pricing engines or predictive maintenance systems that act without constant supervision. Higher risk, higher reward.

- Level 5 – Full Autonomy: Self-driving cars. Autonomous trading bots. These systems operate independently — and require bulletproof data and oversight.

The takeaway? Start where your organization is ready. Don’t force Level 5 ambitions on Level 2 infrastructure. Progress steadily, and let your success stories build your momentum.
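As a rough illustration of “don’t force Level 5 ambitions on Level 2 infrastructure,” the sketch below encodes the five rungs and caps the highest one an organization should attempt by how many items on a data-maturity checklist it meets. The checklist items and the one-rung-per-item rule are hypothetical simplifications, meant to show the shape of the reasoning rather than a validated scoring model.

```python
from enum import IntEnum


class Level(IntEnum):
    BASIC_AUTOMATION = 1
    ENHANCED_ANALYSIS = 2
    DECISION_SUPPORT = 3
    OPERATIONAL_AI = 4
    FULL_AUTONOMY = 5


# Hypothetical maturity checklist; each item met unlocks one more rung.
CHECKLIST = ("centralized", "documented", "quality_monitored", "access_controlled", "audited")


def max_ready_level(maturity: dict) -> Level:
    """Highest rung supported by the data foundation (Level 1 is always allowed)."""
    met = sum(bool(maturity.get(item)) for item in CHECKLIST)
    return Level(max(1, met))


def is_ready(use_case: Level, maturity: dict) -> bool:
    return use_case <= max_ready_level(maturity)


org = {"centralized": True, "documented": True}
print(max_ready_level(org).name)           # ENHANCED_ANALYSIS
print(is_ready(Level.FULL_AUTONOMY, org))  # False
```

Using an ordered enum keeps the ladder comparable: a use case is “in reach” simply when its rung is at or below what the data foundation supports.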

Human vs. Machine: What Should Be Automated?

Just because something can be automated doesn’t mean it should be. One of the toughest challenges in adopting AI is knowing where to draw the line between human intuition and machine execution.

Sure, AI can draft emails, analyze reports, and even help spot disease in medical scans. But should it close a business deal? Fire an employee? Handle a high-stakes diplomatic negotiation? That’s where context and human nuance matter more than algorithms.

Humans bring empathy, judgment, and ethical reasoning to the table — qualities that even the most advanced AI struggles to replicate. Think of AI as an amplifier, not a replacement. It enhances our capabilities but doesn’t (yet) replace what makes us human. The best systems? They put people in the loop, not out of it.

Ethics and Consequences: When AI Gets It Wrong

Here’s a reality check: AI makes mistakes. Sometimes small, like recommending the wrong product. Sometimes massive, like flagging innocent users as fraudsters or misdiagnosing a health condition.

The difference? Reversibility.

AI ethics starts with understanding the stakes. A bad product recommendation is easy to undo; a wrongful fraud flag or a misdiagnosis may not be. If an AI-powered chatbot gives bad advice, the damage is usually recoverable. If an autonomous vehicle misjudges a situation, the consequences could be fatal and permanent. That’s why we must design AI systems with both foresight and fail-safes.

Organizations need clear guidelines: when to trust AI, when to double-check, and when to leave decisions to humans entirely. They also need transparency — users should know when they’re interacting with AI and what the risks are. Without this ethical grounding, even the most sophisticated system can become a liability.
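Those guidelines can be written down as an explicit routing rule: when the AI may act alone, when a human double-checks, and when the decision stays with a person. The sketch below is one hypothetical policy that puts reversibility first and model confidence second; the 0.9 threshold and the three example cases are illustrative, not recommendations.

```python
from enum import Enum


class Route(Enum):
    AUTOMATE = "let the AI act on its own"
    REVIEW = "AI suggests, a human double-checks"
    HUMAN_ONLY = "leave the decision to a human"


def route_decision(confidence: float, reversible: bool, high_stakes: bool,
                   threshold: float = 0.9) -> Route:
    """Hypothetical policy: reversibility first, then model confidence."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # Irreversible, high-stakes calls never go to the machine alone.
    if high_stakes and not reversible:
        return Route.HUMAN_ONLY
    # Low-stakes, easy-to-undo actions can be automated when the model is confident.
    if reversible and not high_stakes and confidence >= threshold:
        return Route.AUTOMATE
    # Everything else: the AI proposes, a person checks.
    return Route.REVIEW


print(route_decision(0.95, reversible=True, high_stakes=False).name)  # AUTOMATE (product recommendation)
print(route_decision(0.97, reversible=True, high_stakes=True).name)   # REVIEW (fraud flag)
print(route_decision(0.99, reversible=False, high_stakes=True).name)  # HUMAN_ONLY (medical diagnosis)
```

Note that high model confidence never overrides irreversibility: a 99% confident diagnosis still goes to a human.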

Choosing the Right Infrastructure

Once you’ve nailed your use case and assessed the risks, it’s time to ask: where does your AI live? That’s not a philosophical question — it’s a practical one. The infrastructure you choose affects everything from performance to compliance.

Here are the three main options:

- Public Cloud APIs (e.g., OpenAI, Anthropic): Fast, easy, and powerful — but your data leaves your environment. That’s a dealbreaker for many in finance, healthcare, or government.

- Sovereign Providers (e.g., Mistral, Aleph Alpha): Hosted within Europe or your country, offering more control and regulatory alignment.

- On-Premise Deployments: The Fort Knox of AI setups. Your models, your servers, your rules. Maximum control, but also higher cost and complexity.

The golden rule?

Let the business case guide the tech. Don’t over-engineer a high-security system for a low-risk chatbot. Likewise, don’t cut corners on compliance just to launch quickly. Smart infrastructure choices start with smart questions.
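A simple way to keep the business case in charge is to start from two questions about the data (how sensitive is it, and is the sector regulated?) and pick the lightest option that still fits. The rule of thumb below is a hypothetical sketch; the sensitivity labels and the mapping are assumptions, and a real decision would also weigh cost, latency, model quality, and your legal team’s reading of the applicable regulations.

```python
SENSITIVITY_LEVELS = ("public", "internal", "confidential")


def choose_deployment(data_sensitivity: str, regulated: bool) -> str:
    """Pick the lightest option that still fits the data (hypothetical rule of thumb)."""
    if data_sensitivity not in SENSITIVITY_LEVELS:
        raise ValueError(f"unknown sensitivity: {data_sensitivity!r}")
    if data_sensitivity == "confidential" and regulated:
        return "on-premise"          # maximum control, highest cost and complexity
    if data_sensitivity == "confidential" or regulated:
        return "sovereign provider"  # data stays within your jurisdiction
    return "public cloud API"        # fastest to launch, but data leaves your environment


print(choose_deployment("public", regulated=False))       # public cloud API
print(choose_deployment("internal", regulated=True))      # sovereign provider
print(choose_deployment("confidential", regulated=True))  # on-premise
```

The point of the ordering is to avoid both failure modes named above: a low-risk chatbot lands on the simplest option, while regulated, confidential data never defaults to a public API.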

The Case for AI Literacy

Here’s the truth: AI isn’t just for data scientists anymore. In today’s digital economy, everyone — from interns to executives — needs a basic grasp of how AI works, what it can do, and where it can go wrong.

This doesn’t mean turning every employee into a Python-wielding ML engineer. But it does mean building a shared language. Can your sales team understand how AI scores leads? Can your marketing team audit an AI-generated report? Can your leadership team evaluate AI risk?

AI literacy is about demystifying the black box. It empowers people to ask the right questions, spot red flags, and use AI tools effectively. And it’s especially vital for younger generations, who will grow up navigating a world shaped by intelligent systems. Just as digital literacy became a core skill in the 2000s, AI literacy is the next essential layer.

AI Agents and LLMs: What You Need to Know

Now let’s talk tech, just enough to keep you in the know at your next meeting. You’ve heard of LLMs (Large Language Models). They’re the brains behind tools like ChatGPT. These AI models are trained on massive text datasets to predict the next word (more precisely, the next token) in a sequence, which makes them remarkably good at mimicking human language.

But what happens when you want that AI to do something — like cancel an order, book a meeting, or pull real-time data? That’s where agents come in. Think of an LLM as the mind, and an agent as the hands. An agent uses the LLM’s output to take action in the real (or digital) world.

These agents can be surprisingly autonomous. They can trigger workflows, manipulate databases, and even talk to other agents. As their capabilities grow, so does the need for strong safeguards. Because when a system goes from talking to doing, the stakes multiply.
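The “mind and hands” split can be sketched in a few lines. Below, a stand-in function plays the LLM and proposes an action as JSON; the agent parses it and executes it only after two safeguards: an allowlist of known tools, and human approval for actions that change state. The names `fake_llm`, `lookup_order`, `cancel_order`, and the approval hook are hypothetical, and no real LLM API is called.

```python
import json


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM; a real model would generate this JSON itself."""
    if "cancel" in prompt.lower():
        return json.dumps({"tool": "cancel_order", "args": {"order_id": "A123"}})
    return json.dumps({"tool": "lookup_order", "args": {"order_id": "A123"}})


ORDERS = {"A123": "shipped"}  # hypothetical order database


def lookup_order(order_id):
    return f"Order {order_id} is {ORDERS.get(order_id, 'unknown')}"


def cancel_order(order_id):
    ORDERS[order_id] = "cancelled"
    return f"Order {order_id} cancelled"


TOOLS = {"lookup_order": lookup_order, "cancel_order": cancel_order}
NEEDS_APPROVAL = {"cancel_order"}  # actions that change state require a human OK


def run_agent(request, approve=lambda tool, args: False):
    call = json.loads(fake_llm(request))  # the "mind" proposes an action
    tool, args = call["tool"], call["args"]
    if tool not in TOOLS:  # safeguard 1: only allowlisted tools
        return f"Refused: unknown tool {tool!r}"
    if tool in NEEDS_APPROVAL and not approve(tool, args):  # safeguard 2: human approval
        return f"Blocked: {tool} needs human approval"
    return TOOLS[tool](**args)  # the "hands" carry it out


print(run_agent("Where is my order?"))  # Order A123 is shipped
print(run_agent("Cancel my order"))     # Blocked: cancel_order needs human approval
```

Reading data goes through freely, while cancelling an order waits for a person, which is the “talking to doing” jump where the stakes multiply.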

Multi-Agent Ecosystems: Future or Fantasy?

What if AI systems could not only act — but collaborate? That’s the promise of multi-agent ecosystems. Picture this: you give a single prompt, and behind the scenes, a network of AI agents divvies up the tasks, talks to each other, and executes a complex process while you sip your coffee.

It’s not sci-fi. Frameworks like CrewAI and LangChain already support early versions of these capabilities. One agent fetches the data. Another analyzes it. A third sends the report. Add a standard like MCP (Model Context Protocol), which gives agents a common way to connect to tools and data sources, and suddenly you’ve got a digital team that works in sync, often faster and (sometimes) smarter than humans.

But here’s the catch: complexity. More agents mean more potential failure points. Coordination becomes crucial. Oversight becomes harder. As with any powerful system, the key is not just whether it can be done — but whether it should be, and how it’s governed.
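The fetch, analyze, and report hand-off described above, and the failure points that come with it, can be sketched without any framework. In this minimal illustration each “agent” is a plain Python function and a coordinator passes a shared message along the chain, stopping and logging the first failure. It does not use the CrewAI or LangChain APIs, and the sales figures and agent names are hypothetical.

```python
from statistics import mean


def fetch_agent(task):
    # Hypothetical data source: four weeks of sales figures.
    return {"task": task, "data": [120, 130, 125, 165]}


def analyze_agent(msg):
    data = msg["data"]
    if not data:
        raise ValueError("no data to analyze")
    trend = "up" if data[-1] > data[0] else "flat or down"
    return {**msg, "average": mean(data), "trend": trend}


def report_agent(msg):
    return f"{msg['task']}: average {msg['average']:.1f}, trend {msg['trend']}"


PIPELINE = [fetch_agent, analyze_agent, report_agent]


def coordinate(task, pipeline=PIPELINE):
    """Pass one message through each agent; stop and record the first failure."""
    msg, log = task, []
    for agent in pipeline:
        try:
            msg = agent(msg)
        except Exception as exc:  # every extra agent is another failure point
            log.append(f"{agent.__name__} failed: {exc}")
            return None, log
        log.append(f"{agent.__name__} ok")
    return msg, log


result, log = coordinate("Weekly sales")
print(result)  # Weekly sales: average 135.0, trend up
print(log)
```

The log is the oversight piece: when a chain of agents produces nothing, you can see which step failed instead of guessing.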

Building AI-Ready Organizations

So, how do you prepare your organization for all this? The models, the data, the governance, the agents — it can sound overwhelming. But the roadmap to becoming AI-ready is surprisingly grounded.

Start with culture. Build a mindset that values experimentation but respects risk. Foster collaboration between IT, data, compliance, and business units. AI isn’t just a tech project — it’s an organizational transformation.

Then build the scaffolding: strong data governance, clear roles and responsibilities, and AI literacy across departments. Make sure you can measure success — not just in technical performance, but in business value.

Finally, think incrementally. Pilot. Learn. Scale. Don’t try to “AI-ify” everything overnight. Instead, identify high-impact, low-risk opportunities where AI can demonstrate its value. Success in one area will create momentum for others — and confidence across the board.

Conclusion

Generative AI isn’t a silver bullet. It’s not the end of work, nor the answer to all your business woes. But it is a powerful tool — and like all tools, its value depends on how, when, and why you use it.

Organizations that win with AI aren’t the ones chasing trends. They’re the ones asking the right questions, investing in foundational capabilities, and building with purpose. They respect the risks, but don’t fear the future.

So, is AI a bubble? Maybe. A mirage? In some contexts. But most importantly, it’s an opportunity — if you’re ready to engage with it strategically, ethically, and intelligently. The revolution is real. And it’s already begun.

As AI continues to evolve, particularly in countries like Canada, where AI research and adoption are thriving, it’s crucial to stay informed about the latest developments. Whether you’re exploring ChatGPT in Canada or considering broader AI applications, success lies in balancing innovation with responsible use. By focusing on data quality, privacy, and ethics, organizations can harness the power of generative AI while navigating an increasingly complex landscape of AI regulations and policies.

FAQ

Why is data so important for AI?
Data is the fuel for AI. Successful AI models rely on well-processed, clean, and structured data to make accurate predictions.

What role does data governance play in AI?
Data governance keeps data reliable, secure, and accessible to the right people. That builds trust in AI systems, makes them more effective, and prevents digital chaos.

Why does AI literacy matter?
AI literacy helps employees understand what AI can do and where it can go wrong, so they can use it effectively and share a common language across the organization.